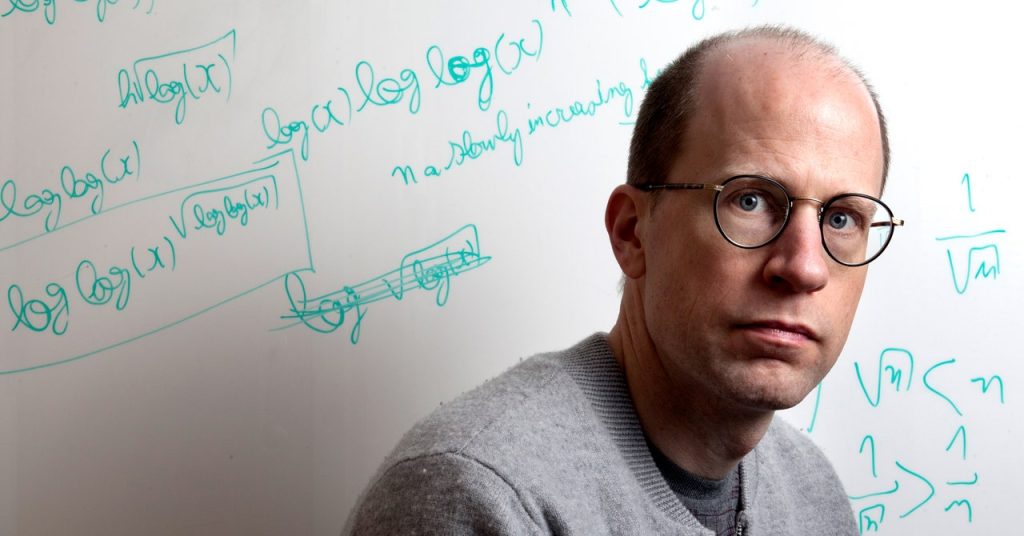

The Optimistic Philosopher: Nick Bostrom’s Vision of a Utopian Future

Nick Bostrom, a philosopher known for contemplating humanity’s potential downfall, is surprisingly cheerful during a recent Zoom conversation. Despite his reputation for studying the existential risks facing our species, Bostrom appears relaxed and smiling, a stark contrast to his often serious photographs.

From Doom to Utopia

In his previous book, “Superintelligence,” Bostrom explored the potential dangers of advanced artificial intelligence (AI). The book’s themes have since become mainstream, even appearing in popular culture like the TV series “Westworld.” However, Bostrom’s latest work, “Deep Utopia: Life and Meaning in a Solved World,” takes a different approach, envisioning a future where superintelligent machines have been successfully developed, and humanity has averted disaster.

The Implications of a Techno-Utopia

In this hypothetical techno-utopia, disease has been eradicated, and humans can enjoy infinite abundance and indefinite lifespans. Bostrom’s book examines the meaning of life in such a world and asks whether it might prove somewhat unfulfilling. Bostrom believes that, if progress continues and automation becomes more prevalent, these conversations will become increasingly relevant and profound.

The Challenges and Opportunities of AI

Bostrom acknowledges that the development of AI presents both challenges and opportunities. Social companion applications, for example, could provide fulfillment for those struggling in ordinary life, but they may also be subject to abuse. In the political sphere, AI could be used for automated propaganda or to provide personalized advice to citizens, depending on how wisely it is employed.

Coexisting with Digital Minds

The philosopher also considers the moral status of advanced AI systems, particularly those with a sense of self, stable preferences, and the ability to form reciprocal relationships with humans. Bostrom argues that while sentience is a sufficient condition for moral status, it may not be necessary, and that certain sophisticated AI systems may warrant moral consideration even if they are not conscious.

The Inevitability of AI Advancement

Despite the risks, Bostrom believes that the economic, scientific, and military incentives for advancing AI are too strong to ignore. He suggests that those at the forefront of developing transformative AI systems should have the ability to pause at key stages for safety purposes, but he is skeptical of proposals that could lead to a permanent ban on AI development.

As Bostrom puts it: “Ultimately it would be an immense tragedy if this was never developed, that we were just kind of confined to being apes in need and poverty and disease. Like, are we going to do this for a million years?”

The Complexity of the AI Debate

Bostrom acknowledges that the conversation surrounding AI is complex and multifaceted, with immediate issues such as discrimination, privacy, and intellectual property deserving attention. He believes that while individuals may take strong, confident stances in opposite directions, those stances may balance out into a form of global sanity.

A New Chapter for Bostrom

Following the closure of the Future of Humanity Institute at Oxford University, which Bostrom founded in 2005, the philosopher feels a sense of liberation. He plans to spend time exploring and contemplating without a well-defined agenda, embracing the idea of being a free man after years of navigating bureaucratic challenges.
